When I concluded my work on DEEP FL0YD, a music visualizer made in the Processing coding language, I identified some limitations that I wanted to address in a future version. However, this version turned out to be more than a simple update or reskin. Instead, I aimed for aesthetics similar to the original while letting ChatGPT handle most of the programming details. Hello, “FAST FL0YD.”

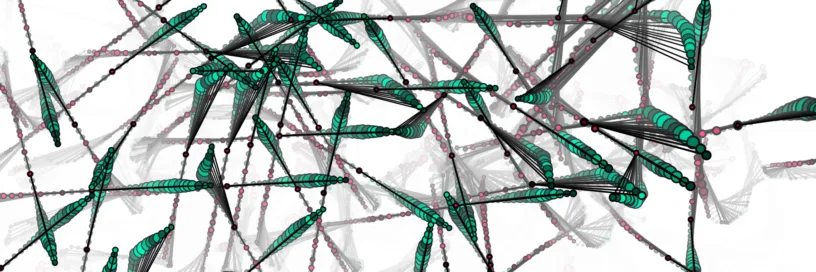

Because an image says more than a thousand words, and a video consists of thousands of images—and also to grab your attention—you can find an example of the visualizer embedded below. During development, I found that Tame Impala works much better than home-recorded progressive indie music, so, for once, I decided to upload a song that is not my own. Be sure to watch in 1440p because any lower resolution will look bad. Also, please install uBlock Origin because YouTube ads are more aggressive than my videos, and I do not monetize, anyway.

Since I already knew what the output was supposed to look like, the visualizer was almost fully functional after one day. Nonetheless, the devil is in the details, so synchronizing audio and visuals took me another full day. So did finding good export settings for the videos. Not to complain, but ChatGPT is still unable to maintain code bases of several hundred or thousand lines, more often than not leading to missing functions, header variables, and much frustration in the process. Out of sheer desperation, I made my own Python tool to quickly combine scripts and copy them into the clipboard to feed the AI just enough information without overwhelming it.

Back to the topic: the most important improvement in FAST FL0YD is real-time capability. Whereas the previous version required extensive pre-processing in the proprietary (and slow) software MATLAB, e.g., importing individual audio multi-tracks to determine their envelopes, the new version relies on the Fast Fourier Transform (FFT), which is calculated in real time and gives FAST FL0YD its very name. Essentially, the FFT computes how strongly each frequency is present in the audio at a given moment. These magnitudes are then grouped into bands of one octave each, amounting to a total of eight bins of usable information. In other words, the visualizer constantly reads eight numeric values that slightly change each frame, depending on how much bass, mid-range, and treble are present.
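To make the octave grouping concrete, here is a minimal Python sketch rather than the actual Processing code. The lowest band edge of 40 Hz, the Hann window, the frame length, and the averaging within each band are all assumptions for illustration; the real visualizer may choose differently.

```python
import cmath
import math

def fft(x):
    """Recursive radix-2 FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return [complex(x[0])]
    even, odd = fft(x[0::2]), fft(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        twiddle = cmath.exp(-2j * math.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle
        out[k + n // 2] = even[k] - twiddle
    return out

def octave_bands(frame, sample_rate, n_bands=8, f_low=40.0):
    """Average FFT magnitude per octave band (f_low is an assumed lower edge)."""
    n = len(frame)
    # A Hann window reduces leakage between neighbouring frequencies.
    windowed = [s * 0.5 * (1 - math.cos(2 * math.pi * i / (n - 1)))
                for i, s in enumerate(frame)]
    mags = [abs(c) for c in fft(windowed)[: n // 2 + 1]]
    bands = []
    for b in range(n_bands):
        lo, hi = f_low * 2 ** b, f_low * 2 ** (b + 1)  # each band spans one octave
        inside = [m for k, m in enumerate(mags) if lo <= k * sample_rate / n < hi]
        bands.append(sum(inside) / len(inside) if inside else 0.0)
    return bands

# A pure 440 Hz tone should light up the 320-640 Hz band (index 3).
sample_rate = 44100
frame = [math.sin(2 * math.pi * 440.0 * i / sample_rate) for i in range(2048)]
bands = octave_bands(frame, sample_rate)
loudest = bands.index(max(bands))
```

With these assumed edges, the eight bands cover roughly 40 Hz to 10 kHz, which is where most musical content sits.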

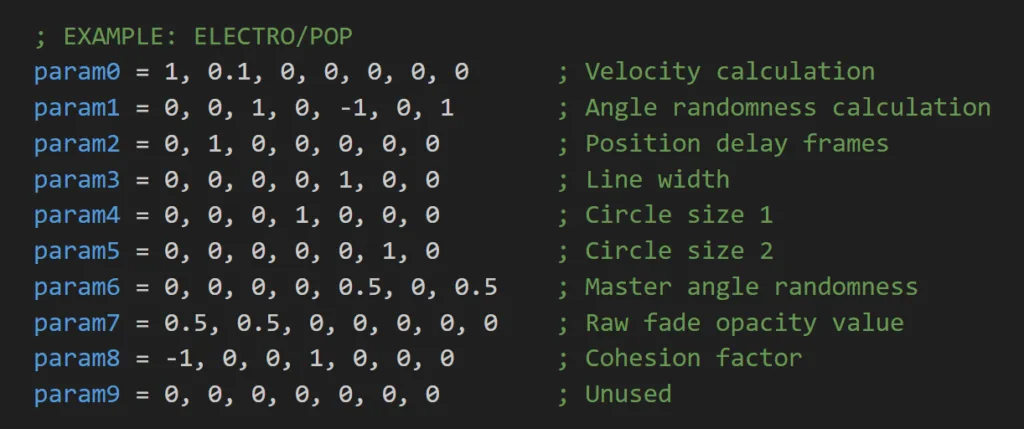

The only question left is how the FFT bins should be mapped to the parameters that control the visuals. These visual parameters include, for example, the velocity of the Floyd particles, their angular randomness, the line width, circle sizes, and some new parameters which I will detail further down. The simple answer: you should do the mapping yourself. I wanted to let the user choose arbitrary linear mappings between input and output parameters without programming a GUI or allowing the user to type mappings as code, which could open the door to malicious code injection. Hence, after several unsuccessful and bloated attempts at writing my own text parser in Java, I opted for a good old matrix approach, which you can see in the image below. The columns represent the frequency bins, and the rows represent the visualizer controls. A one means the control fully follows that bin, while a zero means the bin has no influence on it at all.

I believe that these mappings are highly song- or genre-specific, and I encourage you to play around with the numbers more than I have thus far. The matrix I made for The Moment (pun intended) is only a starting point for overly compressed pop songs featuring a lot of automated bandpass filters.

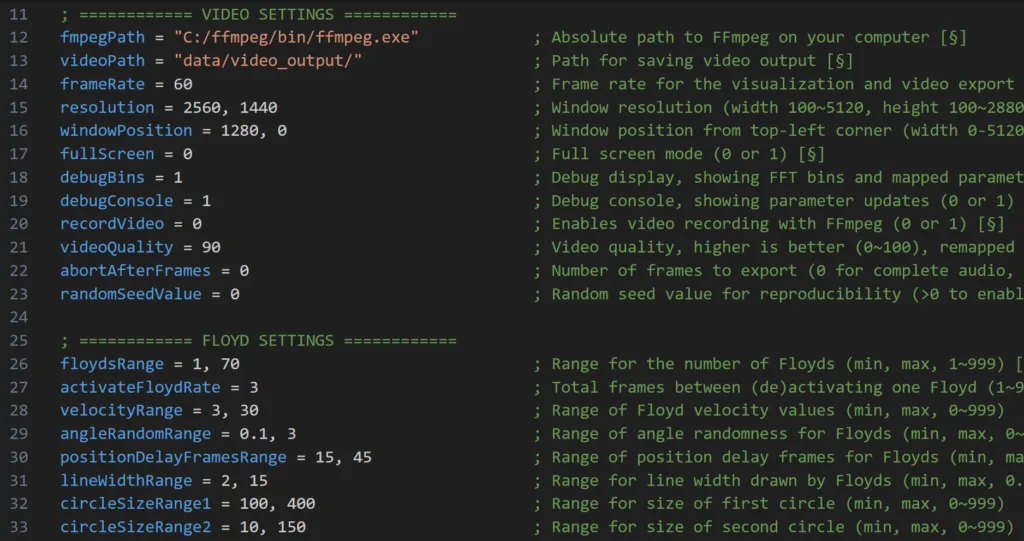
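The matrix idea boils down to one weighted sum per control. The following Python sketch shows that under a few assumptions: the bin values are already normalized to 0..1, each control has a minimum and maximum, and the control names and ranges here are hypothetical rather than taken from the actual INI file.

```python
def map_bins_to_controls(matrix, bins, ranges):
    """Weighted sum of the bins per control, scaled into that control's range."""
    controls = {}
    for (name, (lo, hi)), row in zip(ranges.items(), matrix):
        drive = sum(weight * value for weight, value in zip(row, bins))
        drive = min(max(drive, 0.0), 1.0)  # keep the result inside 0..1
        controls[name] = lo + drive * (hi - lo)
    return controls

# Hypothetical controls with (min, max) ranges; one matrix row per control.
ranges = {"velocity": (1.0, 10.0), "lineWidth": (0.5, 4.0)}
matrix = [
    [1, 0, 0, 0, 0,   0,   0, 0],  # velocity follows the lowest bin only
    [0, 0, 0, 0, 0.5, 0.5, 0, 0],  # line width follows two mid-range bins equally
]
bins = [0.8, 0.1, 0.2, 0.0, 0.4, 0.6, 0.0, 0.0]
controls = map_bins_to_controls(matrix, bins, ranges)
```

Because the mapping is only numbers, a typo in the matrix can at worst produce odd visuals, never executable code.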

The previous version of the visualizer also had one major drawback: all users had to install the Processing environment first and then change variables in the code header. Naturally, this was an impediment for people without coding experience. Outsourcing all user variables to a separate INI file that updates the visualizer in real time spared me the tedious programming of a GUI and made the new visualizer much more versatile. This method is also quite robust: every variable is limited to a certain range, and faulty lines in the INI file fall back to default values. Here is an excerpt from that file to give you an idea.
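Separately from the file itself, the range limiting and default fallback described above can be sketched in a few lines of Python. This is only an illustration of the idea: the keys `numFloyds` and `lineWidth`, their defaults, and their ranges are made up and not taken from the actual INI file.

```python
import math

# Hypothetical settings: name -> (default, minimum, maximum)
SPEC = {"numFloyds": (200.0, 1.0, 5000.0), "lineWidth": (1.5, 0.1, 10.0)}

def load_settings(text, spec=SPEC):
    """Parse 'key = value' lines, clamp to range, fall back to defaults."""
    settings = {name: default for name, (default, _, _) in spec.items()}
    for line in text.splitlines():
        key, separator, raw = line.partition("=")
        key = key.strip()
        if not separator or key not in spec:
            continue  # malformed or unknown line: ignore it
        _, lo, hi = spec[key]
        try:
            value = float(raw.strip())
        except ValueError:
            continue  # not a number: keep the default
        if math.isfinite(value):
            settings[key] = min(max(value, lo), hi)
    return settings

settings = load_settings("lineWidth = 99\nnumFloyds = lots\nframeRate 60")
# lineWidth is clamped to 10.0, numFloyds keeps its default of 200.0
```

Re-reading the file whenever it changes on disk and calling the loader again would give the live-update behavior without any GUI code.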

Similar to the old version, there is an invisible Master Floyd that randomly roams a portion of the screen. Depending on how high the cohesion value is (another parameter that can be mapped to the FFT bins), the visible Floyds either mass together to pursue their Master or prowl around completely unaffected. The old concept of forcing a limited number of possible directions onto the Floyds also remains, for example, allowing them to move only up, down, left, or right, with some randomness included. Likewise, a rotation bias may be forced onto the Floyds, making them pirouette. Both lists, directional and angular biases, can be changed by the user, and values are drawn from them at random. For instance, the following line gives the Floyds a 70% chance of no rotational bias, a 10% chance of a counterclockwise rotation, and a 20% chance of a moderate clockwise rotation (be aware that the usual order of operations does not apply here: `7*0` means seven entries of the value 0, not a multiplication):

directionBiasOptions = 7*0, 1*1, 2*-0.5
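To show how such a line can be read as "count times value", here is a small Python sketch. The parsing rules are inferred from the example above and may differ from what the visualizer actually does.

```python
import random

def parse_weighted_options(text):
    """Split 'count*value' entries into a list of values and a list of weights."""
    values, weights = [], []
    for item in text.split(","):
        count, _, value = item.strip().partition("*")
        weights.append(int(count))
        values.append(float(value))
    return values, weights

values, weights = parse_weighted_options("7*0, 1*1, 2*-0.5")
probabilities = [w / sum(weights) for w in weights]  # 0.7, 0.1, 0.2

# Draw one bias; entries with a higher count are picked more often.
bias = random.choices(values, weights=weights, k=1)[0]
```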

Two further improvements are linked to the variables positionDelayFramesRange and movingAverageBinFrames. In essence, the Floyd particles are tiny dumbbells consisting of two circles connected by a straight line. In the last version, the two circle positions had to come from consecutive time steps. However, the new version allows a certain delay between the two, specified by the variable positionDelayFramesRange and the corresponding mappings, thus creating feathery, fish-bony structures when the angular randomness is high enough. This lets the visualizer show a lot of action without significantly increasing the total number of Floyds.
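One way to picture the delayed dumbbell is a short position history per particle. The Python sketch below assumes exactly that; the class name `FloydTrail` and the clamping of the delay are hypothetical choices for illustration, not the visualizer's actual data structure.

```python
from collections import deque

class FloydTrail:
    """Keeps the last few positions so both dumbbell ends can be looked up."""

    def __init__(self, max_delay):
        self.history = deque(maxlen=max_delay + 1)

    def update(self, position):
        self.history.append(position)

    def dumbbell(self, delay):
        """Return (older end, current end); call update() at least once first."""
        delay = min(delay, len(self.history) - 1)  # not enough history yet: shorten
        return self.history[-1 - delay], self.history[-1]

trail = FloydTrail(max_delay=3)
for x in range(4):
    trail.update((x, 0))

short = trail.dumbbell(1)  # ends one frame apart, as in the old version
long = trail.dumbbell(3)   # ends three frames apart, giving a longer stroke
```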

The other improvement is that the FFT bins may be averaged over several frames, their number specified by movingAverageBinFrames. In science, particularly signal processing, this is called a moving average filter—and God, am I happy to apply the serious stuff I learned in university to my art. If you find that the Floyds lack punch, you can decrease the number of frames to be averaged. Or increase it if the Floyds flicker so fast it gives you seizures (looking at you, Freestyler by Bomfunk MC’s). I found that a good compromise between punchy and smooth is averaging five frames in both directions, looking back and forward from the current frame.
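A centered moving average over the bins can be sketched like this in Python. The half-width of five frames matches the compromise mentioned above; how movingAverageBinFrames translates into that half-width, and how the edges of the track are handled, are assumptions.

```python
def centered_moving_average(frames, index, half_width=5):
    """Average each bin over the frames from index-half_width to index+half_width."""
    lo = max(0, index - half_width)
    hi = min(len(frames), index + half_width + 1)  # window shrinks at the edges
    window = frames[lo:hi]
    n_bins = len(frames[0])
    return [sum(frame[b] for frame in window) / len(window) for b in range(n_bins)]

# Twenty frames of eight bins, where every bin simply holds the frame number.
frames = [[float(i)] * 8 for i in range(20)]
middle = centered_moving_average(frames, 10)  # averages frames 5..15
start = centered_moving_average(frames, 0)    # averages frames 0..5
```

A smaller `half_width` keeps transients sharp, while a larger one trades punch for calmer motion.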

This might trigger the question: “How does the visualizer look several frames ahead if the visuals are calculated in real time?” By using an audio buffer, which brings me to the next key issue: when I first exported a video of FAST FL0YD, I was disappointed to find that audio and video started out in sync, but by the end, the audio always finished first. Of course, this had to do with the real-time capability. When the rendering lagged behind for a frame or two (not unusual at 60 FPS on a single CPU thread), the audio track kept moving on while the gap in the video was filled with subsequent frames, so the exported video ran longer than the audio. Hence, I implemented two different modes: live mode, which always synchronizes audio and video, losing some frames in the process if your hardware cannot keep up, and video mode, which always stays true to a constant frame rate, usually taking a bit longer and playing the sound a bit slower. Utilizing multiple CPU cores and the GPU would be an even better solution, but this exceeds my current programming skills and, frankly, the capabilities of vanilla Processing.
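The difference between the two modes comes down to which clock decides what a frame shows. The following Python sketch is a deliberately simplified model, not the actual implementation: it assumes the audio plays at wall-clock speed in live mode, and the function name `simulate` is made up.

```python
def simulate(render_times, fps=60, mode="live"):
    """Return the audio timestamp (in seconds) that each rendered frame depicts."""
    stamps, wall_clock = [], 0.0
    for n, cost in enumerate(render_times):
        if mode == "live":
            stamps.append(wall_clock)  # follow the audio clock; slow frames cause skips
        else:
            stamps.append(n / fps)     # fixed step per frame, however long rendering took
        wall_clock += max(cost, 1.0 / fps)
    return stamps

# The second frame takes three frame periods to render.
render_times = [1 / 60, 3 / 60, 1 / 60]
live = simulate(render_times, mode="live")    # third frame jumps ahead to 4/60 s
video = simulate(render_times, mode="video")  # third frame stays at 2/60 s
```

In live mode the jump means two frames were never drawn; in video mode nothing is skipped, but the run takes longer than the song.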

What also does not come with vanilla Processing is video export, for which I had to use FFmpeg, a well-established open-source framework. It features countless settings and parameters to tune in order to get good-looking videos, but after a whole day of trial and error, I decided to use h264 as a codec and yuv420p as a pixel format. These were pretty much the only settings that led to stable videos. You can still lower the CRF (Constant Rate Factor) to let the encoder spend more bits per frame, which raises the overall quality at the cost of larger files.
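For orientation, an FFmpeg call with these settings could be assembled as follows in Python. The codec, pixel format, and CRF flags are standard FFmpeg options; the numbered PNG input, the file names, the AAC audio codec, and the CRF value of 18 are assumptions, since the visualizer may well pipe frames to FFmpeg differently.

```python
def build_ffmpeg_command(frame_pattern, audio_path, output_path, fps=60, crf=18):
    """Assemble an FFmpeg argument list for H.264 video with yuv420p pixels."""
    return [
        "ffmpeg", "-y",                             # overwrite the output if it exists
        "-framerate", str(fps), "-i", frame_pattern,  # image sequence as video input
        "-i", audio_path,                           # the song as audio input
        "-c:v", "libx264", "-pix_fmt", "yuv420p",
        "-crf", str(crf),                           # lower CRF = higher quality, larger file
        "-c:a", "aac", "-shortest",                 # stop at the shorter of the two inputs
        output_path,
    ]

# Hypothetical file names; pass the list to subprocess.run() to actually encode.
command = build_ffmpeg_command("frames/%06d.png", "song.wav", "fastfloyd.mp4")
```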

If I had to list possible improvements again:

- The colors and color schemes still need refinement. Currently, the colors rotate around the HSI cylinder at a user-specified rate, but selecting non-complementary colors for the Floyd circles is not possible.

- The feature allowing Floyd swarms to assume certain shapes (such as creepy messages, stickman pornography, or PowerPoint clipart) unfortunately did not survive the move from the old version. I would like to re-implement it as soon as I figure out how to smooth the transitions between shapes.

- I did not get the seed for the random number generator to work, not even after making my own. Therefore, the visualizer always evolves differently, even though reproducibility would be crucial when trying to export the best version of a video.

- Additionally, linking FAST FL0YD to the blockchain to create NFTs and crypto-currency will make me incredibly rich—kidding, this will never happen. For the most part, I am satisfied with the current version of the visualizer and do not plan any major updates in the near future.

Self-evidently, there are many more features in FAST FL0YD that I have not mentioned yet, so I invite you to try them out on your own. Surprising myself once again, I created my first public GitHub repository and even included release versions for Windows and Linux. Sorry, Mac users, but because you use Macs, you deserve to be punished and download Processing like in the old days, compiling and running the visualizer yourselves (to be honest, it is not that hard, not even for you).

That being said, you can view the repository here. Or, if you simply want to run the standalone EXE without installing the Processing environment, you can download FAST FL0YD here.

If you feel ready to export your own videos, simply install FFmpeg. I included a detailed README that guides you through the process and provides more technical insights into the visualizer. Have fun, and be sure to share your parameter matrix with me once you find the ideal visuals for your song.
