⚠️ WARNING: The videos on this page use rapidly changing visual patterns and may cause seizures in some viewers. Some videos may also contain traces of profanity, violence, porn, inside jokes, floyds, glitches, and pseudo-randomness.

Be sure to watch in 1440p because any lower resolution will look like crap. Also, please install uBlock Origin because YouTube ads are more aggressive than my videos (which is really saying something), and I do not monetize my videos anyway.

“DEEP FL0YD” is a music visualizer and should not be confused with “DeepFloyd,” the “AI Research Band,” whatever it is that makes their product newer or better than all the other generative AIs. I want to emphasize that I had uploaded videos before they launched their website.

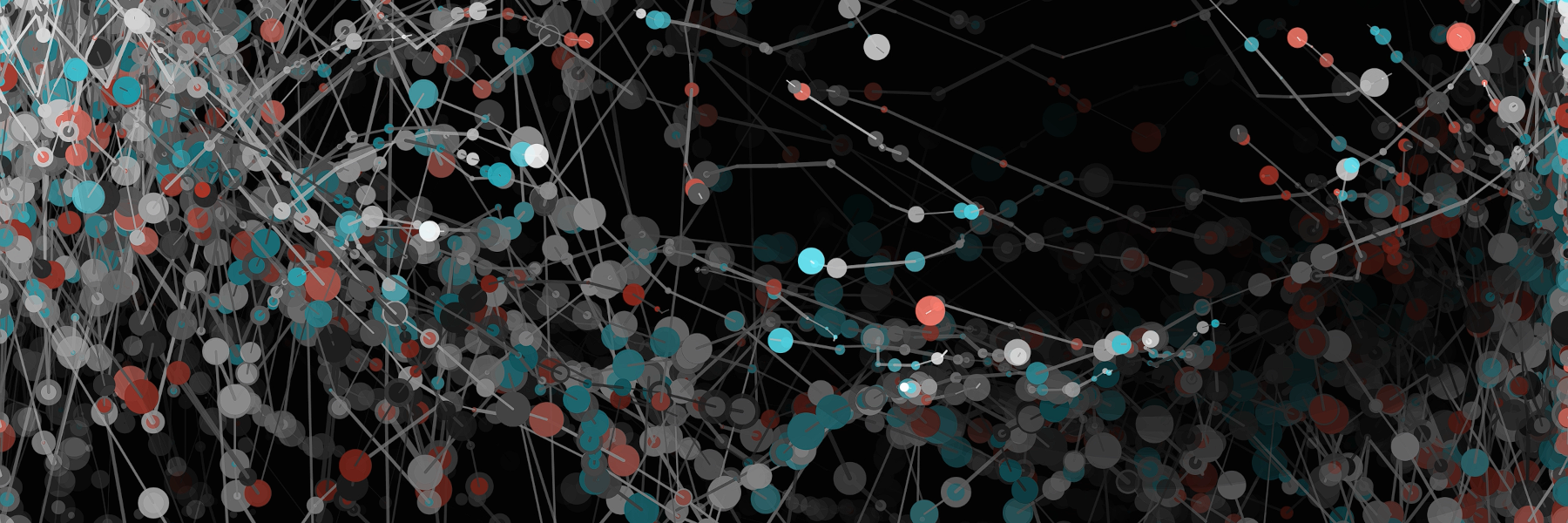

That being said, DEEP FL0YD is a music visualizer I programmed in the Processing coding language, using some MATLAB for pre-processing. In a nutshell, it controls the motion of so-called “floyds,” a swarm of dots, by matching their motion parameters to the music. As the dots change position from one video frame to the next, a circle is drawn at both the old and the new position, and the two circles are connected by a line. This looks a lot like growing and blooming sprigs, which would grow on and on if they were not gradually dimmed by being overlaid with an almost transparent black sheet.
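To make the trail effect concrete, here is a minimal plain-Java sketch using Java2D instead of Processing. It is not the original code: the class `FloydTrail`, its methods, and the alpha value are hypothetical. It draws one motion step (two circles plus a connecting line) and then lays a nearly transparent black sheet over the canvas, which is what makes older sprigs fade.

```java
import java.awt.AlphaComposite;
import java.awt.Color;
import java.awt.Graphics2D;
import java.awt.image.BufferedImage;

// Hypothetical sketch of the floyd trail rendering, written with Java2D
// instead of Processing. Names and parameters are illustrative only.
final class FloydTrail {

    // Black RGB canvas; TYPE_INT_RGB pixels start out as 0 (black).
    static BufferedImage newCanvas(int width, int height) {
        return new BufferedImage(width, height, BufferedImage.TYPE_INT_RGB);
    }

    // One motion step: a circle at the old and at the new position,
    // connected by a straight line.
    static void drawStep(Graphics2D g, int oldX, int oldY, int newX, int newY,
                         int radius, Color color) {
        g.setColor(color);
        g.fillOval(oldX - radius, oldY - radius, 2 * radius, 2 * radius);
        g.fillOval(newX - radius, newY - radius, 2 * radius, 2 * radius);
        g.drawLine(oldX, oldY, newX, newY);
    }

    // Dims everything drawn so far by laying an almost transparent black
    // sheet (alpha 0..255) over the whole canvas.
    static void fade(Graphics2D g, int width, int height, int alpha) {
        g.setComposite(AlphaComposite.SrcOver);
        g.setColor(new Color(0, 0, 0, alpha));
        g.fillRect(0, 0, width, height);
    }

    // Idealized number of fades until a trail keeps at most `remaining`
    // (0..1) of its brightness, ignoring 8-bit rounding of real pixels.
    static int framesToFade(double alpha01, double remaining) {
        return (int) Math.ceil(Math.log(remaining) / Math.log(1.0 - alpha01));
    }
}
```

Calling `drawStep` for every floyd and then `fade` once per frame keeps recent segments bright while older ones decay geometrically, roughly by a factor of (1 - alpha / 255) per frame.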

As an example, watch the video below, which I promise you is one of the more tolerable ADME songs.

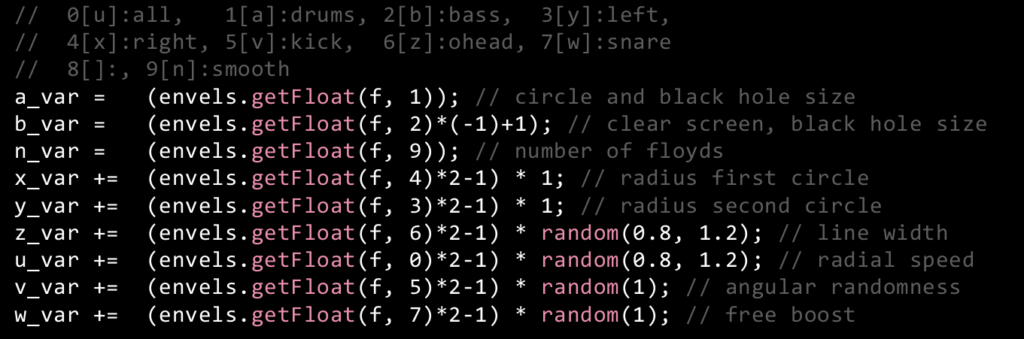

What makes DEEP FL0YD special is that the songs are imported as separate audio tracks, such as bass, kick drum, snare drum, overhead microphones, master, left, and right. I used MATLAB to calculate the volume (or envelope) of each audio track for each video frame and exported everything as comma-separated values. Each envelope is then linked to one parameter, for example, speed, angular randomness, circle size, or line width. In the original form, these links were fixed, so that, for example, a loud kick drum would always increase the floyd speed, acting as a driving force both acoustically and visually. In practice, the links were defined by complex formulas, until I decided to randomize them as well, much to my own dismay: in hindsight, I could have gotten more out of the videos with less randomness.
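The envelope-and-link idea can be sketched in a few lines of Java. This is an illustration under assumptions, not the original MATLAB script: here "envelope" is taken to mean the RMS level of the samples that fall into one video frame, the links are simple linear mappings, and the randomized variant is a random permutation of tracks to parameters. `TrackEnvelopes` and its methods are hypothetical names.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Random;

// Hypothetical sketch: per-video-frame envelopes of separate audio tracks,
// plus fixed or randomized links from tracks to motion parameters.
final class TrackEnvelopes {

    // Assumption: envelope = RMS level of the samples in one video frame
    // (sampleRate / fps samples per frame; a trailing partial frame is dropped).
    static double[] rmsPerFrame(double[] samples, int sampleRate, int fps) {
        int hop = sampleRate / fps;
        int frames = samples.length / hop;
        double[] env = new double[frames];
        for (int f = 0; f < frames; f++) {
            double sum = 0.0;
            for (int i = f * hop; i < (f + 1) * hop; i++) {
                sum += samples[i] * samples[i];
            }
            env[f] = Math.sqrt(sum / hop);
        }
        return env;
    }

    // Fixed linear link: maps an envelope value (clamped to 0..1) into the
    // range of one motion parameter, e.g. kick drum -> floyd speed.
    static double link(double env, double min, double max) {
        double t = Math.max(0.0, Math.min(1.0, env));
        return min + t * (max - min);
    }

    // Randomized links: links[track] is the parameter index driven by that track.
    static int[] randomLinks(int count, long seed) {
        List<Integer> order = new ArrayList<>();
        for (int i = 0; i < count; i++) {
            order.add(i);
        }
        Collections.shuffle(order, new Random(seed));
        int[] links = new int[count];
        for (int i = 0; i < count; i++) {
            links[i] = order.get(i);
        }
        return links;
    }
}
```

At 48 kHz and 60 fps, each video frame covers 800 samples, so one second of audio yields 60 envelope values per track, one CSV row per frame.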

However, the zeroth version of DEEP FL0YD did not react to music at all. Instead, it had manual controls: mainly the mouse position, but also keys for changing the color scheme, disabling circles or lines, randomizing the current parameters, or making all floyds magnetically rise to the ceiling. The mouse position was later replaced by the audio envelopes, but most of the key functions were still present in the first version, allowing manual intervention while the video was being generated.

To showcase this, watch the song “Bloom & Decay” by isn, which is linked below. In this video, my friend Daniel cross-faded multiple takes to better match the feel of the music, something I should have done myself more often.

As you can see, there are repeating patterns in the floyds’ motions. They originate from two kinds of attractors: a few invisible master floyds that follow horizontal and vertical sine waves, and the center of mass of all visible slave floyds. In other words, the swarm targets its own center as well as some periodically changing position on the screen. Naturally, the floyds are not allowed to leave the screen and are instead reflected at the borders. All this leads to characteristic motion patterns that depend on the chosen parameters.
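The swarm logic described above can be sketched as follows. This is a simplified illustration, not the original Processing code: it uses a single master floyd instead of several, and the class `Swarm`, the sine frequencies, the pull strengths, and the speed limit are all hypothetical choices. Each visible floyd is pulled toward the center of mass and toward the master, its speed is capped, and it bounces off the screen borders.

```java
import java.util.Random;

// Hypothetical sketch of the swarm motion: every visible floyd is pulled
// toward the swarm's center of mass and toward one invisible master floyd
// that follows horizontal and vertical sine waves. All constants are illustrative.
final class Swarm {
    final double width, height;
    final double[] x, y, vx, vy;

    Swarm(int count, double width, double height, long seed) {
        this.width = width;
        this.height = height;
        x = new double[count];
        y = new double[count];
        vx = new double[count];
        vy = new double[count];
        Random rnd = new Random(seed);
        for (int i = 0; i < count; i++) {
            x[i] = rnd.nextDouble() * width;
            y[i] = rnd.nextDouble() * height;
        }
    }

    // Mirrors a coordinate back into [0, size]; assumes the overshoot is
    // smaller than size (callers keep maxSpeed below width and height).
    static double reflect(double pos, double size) {
        if (pos < 0) return -pos;
        if (pos > size) return 2 * size - pos;
        return pos;
    }

    void step(double t, double pullCenter, double pullMaster, double maxSpeed) {
        // Master position: independent sine waves on each axis.
        double mx = width / 2 * (1 + 0.8 * Math.sin(0.7 * t));
        double my = height / 2 * (1 + 0.8 * Math.sin(1.1 * t));
        // Center of mass of all visible floyds.
        double cx = 0, cy = 0;
        for (int i = 0; i < x.length; i++) {
            cx += x[i];
            cy += y[i];
        }
        cx /= x.length;
        cy /= x.length;
        for (int i = 0; i < x.length; i++) {
            vx[i] += pullCenter * (cx - x[i]) + pullMaster * (mx - x[i]);
            vy[i] += pullCenter * (cy - y[i]) + pullMaster * (my - y[i]);
            double speed = Math.hypot(vx[i], vy[i]);
            if (speed > maxSpeed) {
                vx[i] *= maxSpeed / speed;
                vy[i] *= maxSpeed / speed;
            }
            double nx = x[i] + vx[i];
            double ny = y[i] + vy[i];
            if (nx < 0 || nx > width) vx[i] = -vx[i];   // bounce off left/right border
            if (ny < 0 || ny > height) vy[i] = -vy[i];  // bounce off top/bottom border
            x[i] = reflect(nx, width);
            y[i] = reflect(ny, height);
        }
    }
}
```

Because the master oscillates at different frequencies on the two axes, its path traces a Lissajous-like curve, which is one plausible source of the repeating patterns.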

Next, I experimented with different styles and color schemes. Check out some representative ADME videos below and never mind how annoying the “music” is.

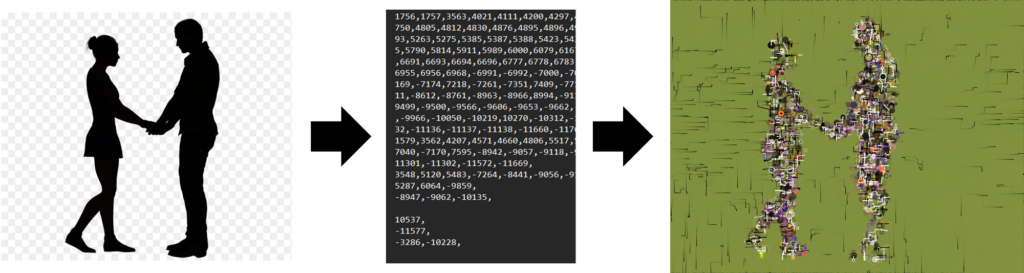

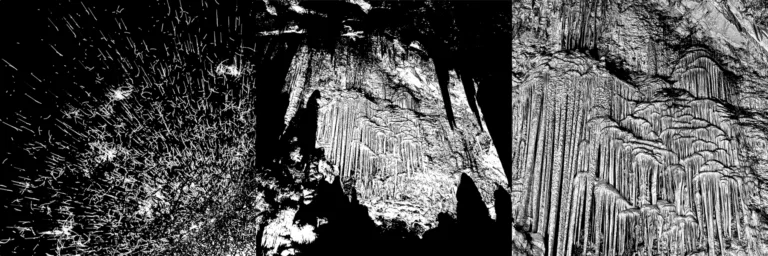

The second version of DEEP FL0YD never yielded any videos and was used solely for experimentation. I temporarily discarded the music component and focused on how images could be used to shape the motion patterns of the floyds. As a basis, I used binary (black and white) images of clear-cut shapes. PowerPoint slides with text, publicly available form art, and the occasional nude turned out to be ideal for the task. I gave the floyds “sight,” enabling them to find the nearest shape and move towards it, even faster if the shape is unoccupied or barely occupied. Additionally, I made the floyds bloom only while hovering over a shape and turn into thin black lines while on the move.
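A minimal sketch of floyd "sight" on a binary image might look like this. It is an assumption-laden illustration, not the original implementation: the image is a row-major `boolean[]` (true means shape pixel), the nearest shape pixel is found by brute force, and the speed boost is a simple linear function of occupancy. `FloydSight` and its methods are hypothetical.

```java
// Hypothetical sketch of floyd "sight" on a binary image (true = shape pixel,
// row-major, index = y * width + x). Brute force is used for clarity; a
// breadth-first search or distance transform would scale better.
final class FloydSight {

    // Index of the shape pixel nearest to (px, py), or -1 if the image is empty.
    static int nearestShapePixel(boolean[] shape, int width, int px, int py) {
        int best = -1;
        long bestDist = Long.MAX_VALUE;
        for (int i = 0; i < shape.length; i++) {
            if (!shape[i]) continue;
            long dx = i % width - px;
            long dy = i / width - py;
            long d = dx * dx + dy * dy;   // squared distance is enough to compare
            if (d < bestDist) {
                bestDist = d;
                best = i;
            }
        }
        return best;
    }

    // Speed multiplier: 1 + boost for an empty shape, 1 for a fully occupied one.
    static double speedFactor(double occupancy, double boost) {
        double o = Math.max(0.0, Math.min(1.0, occupancy));
        return 1.0 + boost * (1.0 - o);
    }

    // Floyds bloom (draw circles) only while hovering over a shape pixel;
    // otherwise they would be drawn as thin black lines.
    static boolean blooms(boolean[] shape, int width, int px, int py) {
        int height = shape.length / width;
        if (px < 0 || py < 0 || px >= width || py >= height) return false;
        return shape[py * width + px];
    }
}
```

Occupancy here would be something like the fraction of a shape's pixels already covered by floyds; how the original measured it is not stated.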

After I figured out how to make the floyds imitate images, I began version three, namely “IR0N ZEPPELIN,” and reintegrated the music, but with one major change: instead of importing the songs as separate audio tracks for kick drum, bass, and so on, the motion control parameters are computed from the EQ bands of the mix. This is how a commercial visualizer would operate, and it works because kick drum, bass, snare drum, cymbals, etc. each have characteristic peaks at certain frequencies. I simply mapped one octave to each control parameter, which is enough to increase the action whenever the volume is high.
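Here is a rough sketch of how one frame of the mix could be split into octave bands. It is not the original MATLAB code: it uses a naive DFT for readability (a real implementation would use an FFT), and the class `OctaveBands` is hypothetical. Band k sums the power of DFT bins 2^k to 2^(k+1) - 1, so each band spans exactly one octave; at 44.1 kHz with 2048-sample frames, band 0 starts at about 21.5 Hz.

```java
// Hypothetical sketch: energy per octave band of one audio frame, computed
// with a naive DFT for clarity (a real visualizer would use an FFT).
// Band k collects DFT bins [2^k, 2^(k+1)), i.e. one octave per band, so it
// spans 2^k * sampleRate / n to 2^(k+1) * sampleRate / n Hz. Expects n >= 4.
final class OctaveBands {

    static double[] octaveEnergies(double[] frame) {
        int n = frame.length;
        int half = n / 2;                                          // bins 1 .. half-1 are used
        int bands = 32 - Integer.numberOfLeadingZeros(half - 1);   // floor(log2(half-1)) + 1
        double[] energy = new double[bands];
        for (int k = 1; k < half; k++) {
            double re = 0.0, im = 0.0;
            for (int t = 0; t < n; t++) {
                double phase = 2.0 * Math.PI * k * t / n;
                re += frame[t] * Math.cos(phase);
                im -= frame[t] * Math.sin(phase);
            }
            int band = 31 - Integer.numberOfLeadingZeros(k);       // floor(log2(k))
            energy[band] += re * re + im * im;
        }
        return energy;
    }
}
```

Each band's energy, scaled into a suitable range, could then drive one control parameter, so a loud bass line and a loud hi-hat would move different parameters.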

The MATLAB script receives only the exported PowerPoint slides as PNG files. I then specify a total number of slide changes (typically, the number of slides), and the script finds that many of the strongest changes in the EQ spectra, yielding approximately equal slide durations that are often matched to the beat. Furthermore, I allowed the images to be cross-faded pixel by pixel, specifying only a maximum duration for the fade.
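One way to reproduce this behavior is sketched below, under explicit assumptions. "Change in the EQ spectra" is modeled as positive spectral flux. To keep slide durations roughly equal, the song is split into as many equal windows as there are slide changes, and the strongest flux frame in each window is picked. The pixel-by-pixel cross-fade gives every differing pixel its own random flip frame within the maximum fade duration. `SlideChanges` and all of its methods are hypothetical; the original script may well work differently.

```java
import java.util.Random;

// Hypothetical sketch of slide-change selection and pixel-wise cross-fading.
final class SlideChanges {

    // Positive spectral flux: how much the EQ spectrum (frames x bands) rises
    // from one frame to the next. Frame 0 has no predecessor and gets 0.
    static double[] flux(double[][] spectra) {
        double[] out = new double[spectra.length];
        for (int f = 1; f < spectra.length; f++) {
            double sum = 0.0;
            for (int b = 0; b < spectra[f].length; b++) {
                sum += Math.max(0.0, spectra[f][b] - spectra[f - 1][b]);
            }
            out[f] = sum;
        }
        return out;
    }

    // Assumption: one change per equal-length window of the song, placed at
    // the window's strongest flux frame, which keeps slide durations similar.
    static int[] pickChanges(double[] flux, int changes) {
        int[] picks = new int[changes];
        for (int i = 0; i < changes; i++) {
            int start = (int) ((long) i * flux.length / changes);
            int end = (int) ((long) (i + 1) * flux.length / changes);
            int best = start;
            for (int f = start + 1; f < end; f++) {
                if (flux[f] > flux[best]) best = f;
            }
            picks[i] = best;
        }
        return picks;
    }

    // Pixel-by-pixel cross-fade: each pixel that differs between two binary
    // slides flips at its own random frame in [0, maxFade); -1 = never flips.
    static int[] flipFrames(boolean[] from, boolean[] to, int maxFade, long seed) {
        Random rnd = new Random(seed);
        int[] flip = new int[from.length];
        for (int i = 0; i < from.length; i++) {
            flip[i] = from[i] == to[i] ? -1 : rnd.nextInt(maxFade);
        }
        return flip;
    }
}
```

Picking one peak per window trades some musical precision for predictable pacing; picking the global top peaks instead could cluster several changes around a single drop.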

While exporting the binary image stream from MATLAB into Processing, I literally invented movie compression (unfortunately, I was not the first to do so). As you can imagine, saving every pixel of every movie frame separately would produce a huge amount of data, even for binary images. Also, much of this data would be redundant because consecutive frames look mostly the same. Therefore, I only exported the pixels that changed from one frame to the next, or rather their indices, i.e., their positions on-screen. The result was rudimentary, relying on text files, but it worked like a charm.
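The delta idea is easy to show in Java. This sketch assumes row-major pixel indices (y * width + x) and a made-up text format of one line per frame with space-separated indices; `DeltaFrames` and its methods are hypothetical. Because the frames are binary, listing which pixels changed is enough: the receiver simply flips them.

```java
import java.util.Arrays;

// Hypothetical sketch of delta encoding for binary frames: only the indices
// of pixels that changed are stored, one text line per frame.
final class DeltaFrames {

    // Indices (row-major: y * width + x) of pixels that differ between frames.
    static int[] changedIndices(boolean[] prev, boolean[] next) {
        int[] buf = new int[next.length];
        int count = 0;
        for (int i = 0; i < next.length; i++) {
            if (prev[i] != next[i]) buf[count++] = i;
        }
        return Arrays.copyOf(buf, count);
    }

    // Rebuilds the next frame in place by flipping the listed pixels,
    // which works because every pixel is either black or white.
    static void apply(boolean[] frame, int[] changed) {
        for (int i : changed) {
            frame[i] = !frame[i];
        }
    }

    // Assumed text format: indices separated by single spaces.
    static String toLine(int[] changed) {
        StringBuilder sb = new StringBuilder();
        for (int i = 0; i < changed.length; i++) {
            if (i > 0) sb.append(' ');
            sb.append(changed[i]);
        }
        return sb.toString();
    }

    static int[] fromLine(String line) {
        String t = line.trim();
        if (t.isEmpty()) return new int[0];
        return Arrays.stream(t.split(" +")).mapToInt(Integer::parseInt).toArray();
    }
}
```

An unchanged frame becomes an empty line, so static stretches between slide changes cost almost nothing.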

As an additional gimmick, I linearly changed the colors, and especially the background, over the duration of a song. Check out the song below, an improvisation by my friend Ole on a modular synthesizer. If you watch only one video with the sound on, make sure it is this one.
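A linear color change over a song boils down to per-channel interpolation driven by the frame number. Processing's built-in `lerpColor()` performs a similar per-channel blend; the plain-Java stand-in below is a hypothetical sketch (`ColorRamp` is not from the original) using packed 0xRRGGBB colors.

```java
// Hypothetical sketch of a linear color change over a song: frame 0 shows
// `from`, the last frame shows `to`. Colors are packed 0xRRGGBB ints.
final class ColorRamp {

    // Interpolates red, green, and blue separately and rounds each channel.
    static int lerpColor(int from, int to, double t) {
        int rgb = 0;
        for (int shift = 16; shift >= 0; shift -= 8) {
            int a = (from >> shift) & 0xFF;
            int b = (to >> shift) & 0xFF;
            int c = (int) Math.round(a + (b - a) * t);
            rgb |= c << shift;
        }
        return rgb;
    }

    // t runs from 0 at the first frame to 1 at the last frame.
    static int colorAtFrame(int from, int to, int frame, int totalFrames) {
        double t = totalFrames > 1 ? frame / (totalFrames - 1.0) : 0.0;
        return lerpColor(from, to, Math.max(0.0, Math.min(1.0, t)));
    }
}
```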

If you watch closely, you will see the floyds move in strict geometric patterns, such as triangles, squares, and other polygons. This was achieved by rounding their direction angles to discrete steps. Of course, I used polar instead of Cartesian coordinates for many of the computations, converting polar radius and polar angle to X and Y later in the process.
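The angle trick can be sketched like this: the heading is rounded to the nearest multiple of 2π/n, and only then is the polar step converted to Cartesian X and Y. With n = 3 the allowed headings form a triangle, with n = 4 a square, and so on. `PolarStep` and its methods are hypothetical names, not the original code.

```java
// Hypothetical sketch: quantized headings in polar coordinates, converted
// to Cartesian X and Y only when the position is updated.
final class PolarStep {

    // Rounds an angle (radians) to the nearest multiple of 2*pi / steps.
    static double quantize(double angle, int steps) {
        double unit = 2 * Math.PI / steps;
        return Math.round(angle / unit) * unit;
    }

    // Moves a point by polar radius r along angle theta; returns {x, y}.
    static double[] step(double x, double y, double r, double theta) {
        return new double[] { x + r * Math.cos(theta), y + r * Math.sin(theta) };
    }
}
```

For example, with four steps a heading of 1.0 rad snaps to π/2, so the floyd moves straight along the Y axis until its (unquantized) heading drifts past the next rounding boundary.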

Lastly, I also used geometric patterns such as warped checkerboards, simply pulling them off Google Images and resizing them in PowerPoint. The cool part is that the resolution can be incredibly low and there can be “Stock” watermarks written all over them: neither affects the floyds’ ability to track the shapes.

Here is one final ADME song that combines it all in one video; it is also the last one I generated with DEEP FL0YD.

By now, you might be aware of one major drawback of the visualizer: it is not real-time capable. On an average computer, rendering takes about twice as long as the song itself. Moreover, it requires pre-processing steps in MATLAB, which take even longer because MATLAB is interpreted, unlike Processing itself, which is compiled.

And as I mentioned before, there is no denying that I overdid it with the randomness. If I were to do it all again, or simply code a fourth version of DEEP FL0YD, I would surely change a lot of things. So here is my list of possible updates that would greatly improve the visualizer:

- For starters, it should be real-time capable. For that, both frequency analysis and slide interpretation would need to be done in Processing or Java. Also, the sound files would need some advanced pre-buffering.

- Secondly, the user should be able to specify the order of slides and their exact fading times.

- Next, I would reskin the floyds using selected color palettes and try to achieve better visuals with fewer floyds, increasing performance and decreasing randomness.

- Also, the floyds should move more smoothly from one shape to the next, because making them bloom only while hovering over a shape was a cheap trick. This could be achieved by improving their path-finding abilities, so that fewer floyds would be needed to fill entire images, again increasing performance.

- Finally, I am not satisfied with how YouTube compression always butchers the videos, although I am a little clueless as to why. They are barely watchable at 1440p, never mind lower resolutions. I guess the algorithm simply is not used to rapidly changing colors, but simplifying the DEEP FL0YD visuals would surely help.
