My sister said she was struggling to find character names for her visual novel. Luckily, I had recently developed an algorithm called Babaism that creates artificial words and uses them to corrupt existing texts. Hence, it was a no-brainer to tune it a little, feed it some first names, and use it to generate names for fantasy and sci-fi characters.

For training data, I used an extensive list of given names from Wikipedia. The page is in German, but the names are from all over the world, featuring about 6500 male and 4300 female names in total (including variants and many unisex names, which consequently appear in both lists). The unique spellings required that I add many more letters to my alphabet, which I crudely divided into vowels and consonants:

Vowels: aeiouyáâãäåæèéêëíîïòóôõöøúüýāăąėęěīıōőœū

Consonants: bcdfghjklmnpqrstvwxzßçðñþćċčđğļľłńňřšşżžț

These letters are then used to split the names into “syllables” or rather “tokens,” meaning groups of consecutive vowels (e.g., a or uio) and consonants (e.g., b or ttsch). I also distinguished between tokens that appear at the beginning, in the middle, or at the end of a name. These tokens are then recombined at random, based on the weighted probabilities of the original names, so that consonants are always followed by vowels and vice versa. Compared with Babaism, one additional enhancement is that the number of tokens per name is also based on the weighted probabilities of the original names, ranging between 2 and 7 tokens.
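To make the idea more concrete, here is a minimal Python sketch of the tokenizing and recombining steps described above. It is not the actual generator code: the function names (`tokenize`, `build_model`, `generate`), the layout of the model dictionary, and the ten sample names are assumptions made purely for illustration. The real training data is the much larger Wikipedia list.

```python
import random
import re
from collections import Counter

VOWELS = "aeiouyáâãäåæèéêëíîïòóôõöøúüýāăąėęěīıōőœū"
CONSONANTS = "bcdfghjklmnpqrstvwxzßçðñþćċčđğļľłńňřšşżžț"

# A token is a maximal run of vowels or a maximal run of consonants.
TOKEN_RE = re.compile(f"[{VOWELS}]+|[{CONSONANTS}]+")

# Tiny stand-in for the real training list (assumption for this sketch).
SAMPLE_NAMES = ["Anna", "Marlene", "Otto", "Bernd", "Ida",
                "Klaus", "Sven", "Emil", "Lena", "Uwe"]


def tokenize(name):
    """Split a name into alternating vowel and consonant tokens."""
    return TOKEN_RE.findall(name.lower())


def is_vowel_token(token):
    return token[0] in VOWELS


def build_model(names):
    """Count name lengths and tokens by position (first, middle, last)."""
    model = {
        "lengths": Counter(),
        "first": Counter(),
        # Middle and last tokens are stored per kind (True = vowel token),
        # so the generator can always pick a token of the required kind.
        "middle": {True: Counter(), False: Counter()},
        "last": {True: Counter(), False: Counter()},
    }
    for name in names:
        tokens = tokenize(name)
        if len(tokens) < 2:
            continue  # single-token names have no separate first and last token
        model["lengths"][len(tokens)] += 1
        model["first"][tokens[0]] += 1
        for token in tokens[1:-1]:
            model["middle"][is_vowel_token(token)][token] += 1
        model["last"][is_vowel_token(tokens[-1])][tokens[-1]] += 1
    return model


def pick(counter, rng):
    """Weighted random choice; fails if the table is empty (tiny data sets)."""
    return rng.choices(list(counter), weights=list(counter.values()), k=1)[0]


def generate(model, rng):
    """Build one name whose tokens alternate between vowels and consonants."""
    length = pick(model["lengths"], rng)
    tokens = [pick(model["first"], rng)]
    vowel = is_vowel_token(tokens[0])
    for _ in range(length - 2):
        vowel = not vowel
        tokens.append(pick(model["middle"][vowel], rng))
    vowel = not vowel
    tokens.append(pick(model["last"][vowel], rng))
    return "".join(tokens).capitalize()


rng = random.Random(42)
model = build_model(SAMPLE_NAMES)
print([generate(model, rng) for _ in range(5)])
```

Storing the middle and last tokens separately for vowels and consonants is just one way to guarantee the alternation. With such a small sample, the output stays close to the input names; the variety comes from the size of the real list.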

As you may have noticed, the web version lets you choose between female, male, or diverse names. The diverse option simply combines the tokens of the male and female lists. Admittedly, the desktop version is a bit more luxurious, as it also lets the user choose how many names to generate. Curiously, when I generated one million artificial names, many pre-existing names turned up among them. However, most seem alien due to the jumble of different languages and adorned letters. On a side note, I did not include any sort of profanity filter, so please excuse me if you stumble upon the occasional swear word or racial slur.
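For the curious, combining two token tables and checking how many generated names already exist could look roughly like the sketch below. The token counts are made up, the helper `share_of_real_names` is hypothetical, and summing the counts is only my assumption of what "combined" means in practice.

```python
from collections import Counter

# Made-up token counts standing in for the tables built from the real lists.
female_tokens = Counter({"a": 5, "nn": 2, "l": 1})
male_tokens = Counter({"o": 4, "tt": 2, "l": 3})

# Adding two Counters sums the counts per token, so tokens that occur
# in both lists carry more weight in the combined table.
diverse_tokens = female_tokens + male_tokens


def share_of_real_names(generated, known):
    """Return the fraction of distinct generated names that already exist."""
    distinct = {name.lower() for name in generated}
    if not distinct:
        return 0.0
    known_lower = {name.lower() for name in known}
    return len(distinct & known_lower) / len(distinct)


print(diverse_tokens["l"])
print(share_of_real_names(["Anna", "Xylo", "anna"], ["Anna", "Otto"]))
```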

As you can see above, the web version of the Fantasy Name Generator is a bit clunky, working with a static PHP form instead of smooth JavaScript. This is not because I want you to buy the premium version or anything, but because it is my first active Python script on this website. I spent the better part of a day figuring out how to execute Python scripts on a WordPress server, which runs on PHP, supposedly not the most user-friendly programming language. At least I learned a great deal about web hosting; for example, I can now transfer files via SFTP and access my server’s command console via SSH. If you are a white-hat (or at least gray-hat) hacker, please notify me about any security loopholes I may have opened.

In time, I might find a secure solution using JavaScript. Until then, feel free to generate and use as many names as you like for your own characters, kingdoms, spaceships, pets, children, or whatever. Chances are high that most names have never been seen by any person alive before.

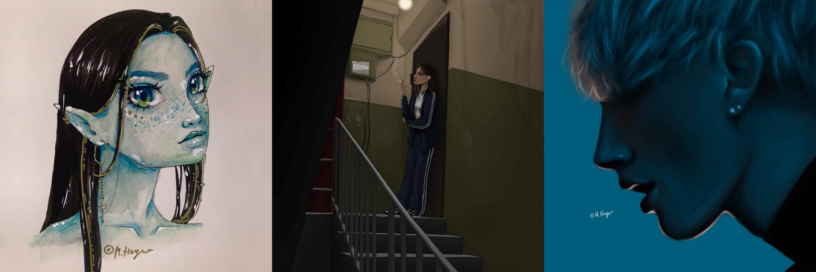

Last but not least, in case you are wondering about the exceptionally high quality of the banner image above: I use it with the permission of my talented sister Marlene (aka Nanno Cloud). Be sure to check out her Instagram!
